Amid disinformation campaign take-downs, Facebook employees, execs clash on how to handle political ads

Facebook CEO Mark Zuckerberg has spent much of the past two weeks telling Congress and reporters that the world’s dominant social network is doing all that it can to stop disinformation campaigns. In the absence of a “disagree” option, social-media influence experts, politicians, and even Facebook employees have been responding to his statements with “Haha,” “Wow,” “Sad,” and “Angry” emoticons.

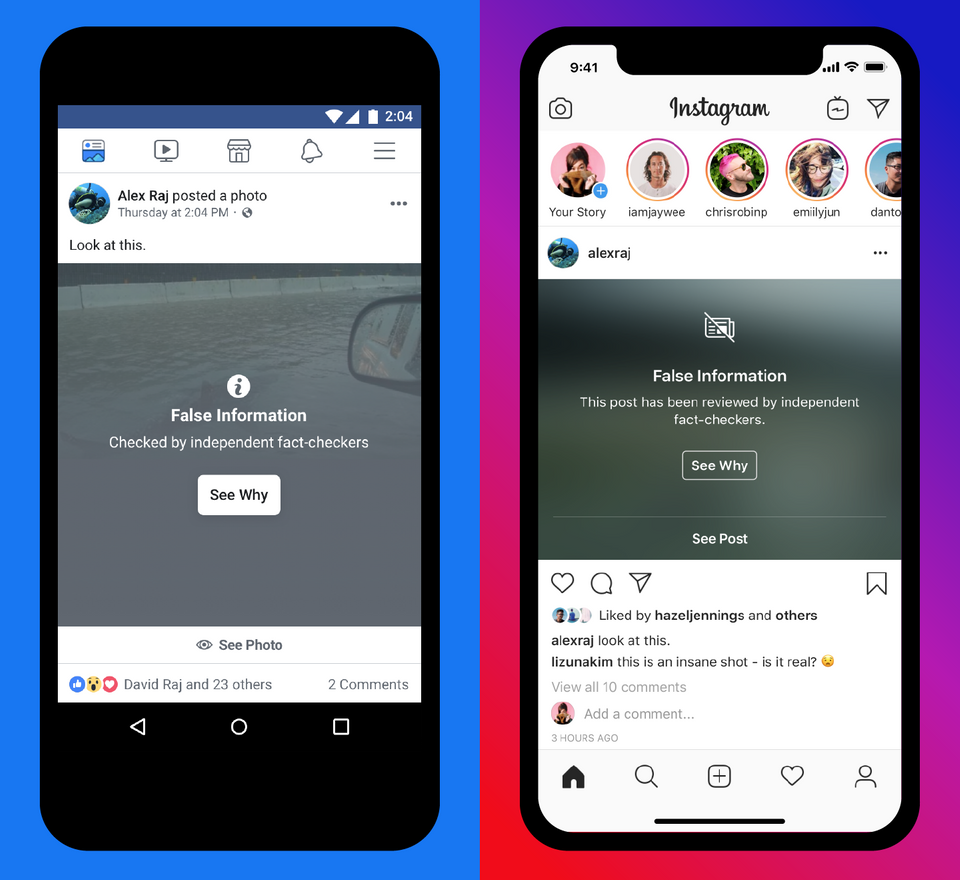

The Menlo Park, Calif.-based company has made what appears to be a plethora of changes to improve security and stop misinformation (and its intentionally misleading subset, disinformation) from spreading. The company algorithmically downgrades factually incorrect statements (unless they come from a politician). It spends more per year on cybersecurity now than its companywide revenue of $5 billion at the time of its IPO in 2012. It offers special protections to politicians to keep their accounts safe. And it labels state-sponsored media as such when shared. It is also working to shut down disinformation campaigns originating abroad.

On October 21, Zuckerberg held a press call to say his company had just taken down four networks of “accounts, Pages, and Groups on Facebook and Instagram for engaging in coordinated inauthentic behavior” by promoting misleading and false news stories across hundreds of accounts, pages, and ads. Those disinformation campaigns, three originating in Iran and one in Russia, targeted the United States, Latin America, and North Africa with networks of accounts designed to mislead followers about “who they were and what they were doing,” wrote Nathaniel Gleicher, Facebook’s cybersecurity policy chief.

“As always, with these takedowns, we’re removing these operations for the deceptive behavior they engaged in, not for the content they shared,” Gleicher said on the press call. Zuckerberg touted the action as a successful part of Facebook’s strategy to fight the viral spread of misinformation, and described the company as “more prepared now” than in 2016. “We see much cleaner results,” he said.

And on October 30, Facebook and the Stanford Internet Observatory, cofounded and run by former Facebook CISO Alex Stamos, announced the take-down of three Russian disinformation campaign networks spanning more than 200 accounts to target voters in eight African countries, all tied to Yevgeniy Prigozhin, a Russian businessman indicted by U.S. special prosecutor Robert Mueller in 2018.

Facebook’s actions and Zuckerberg’s words are not enough, Facebook employees said in an internal letter shared with The New York Times and published on Monday. The spread of disinformation will continue to be a problem on Facebook until the company takes more forceful steps to stop it, they say, and recent studies indicate that a growing distrust of content popping up in “news feeds” might be leading to less engagement on Facebook overall. According to Activate, Facebook usage in the United States fell 26 percent between 2017 and 2019. And according to eMarketer, younger consumers are gravitating toward social networks such as Snap, TikTok, and Facebook’s Instagram that are more focused on visuals and personal experiences than Facebook (and less focused on sharing news and political opinions).

“Facebook stands for people expressing their voice,” the employees wrote. “We’re reaching out to you, the leaders of this company, because we’re worried we’re on track to undo the great strides our product teams have made in integrity over the last two years.”

Facebook versus its employees

The Facebook employees demand that the company hold political ads factually accountable, a direct contradiction of Zuckerberg’s stated desires; that it restrict the practice of targeting political ads to specific Facebook users and moreover prevent politicians from uploading voter rolls to target specific voters; that it cap the amount of money that individual politicians can spend on Facebook advertising; that it broadly adhere to election silence periods in communities that support them; and that it label political ads more visibly for Facebook users.

Facebook said last month that it would not be an arbiter of acceptable speech in the political arena, meaning it would neither take down political ads that violate its policies nor even label them as political ads. And in an address at Georgetown University this month, Zuckerberg argued that he’d co-founded Facebook in part to give people a voice, and that censoring political ads, regardless of their content, would hamper free speech.

“Free speech and paid speech are not the same thing,” the Facebook employees wrote in their letter.

According to the Times, only about 250 employees had signed the letter when it was published. That’s a tiny fraction of Facebook’s more than 35,000 employees, but it could mark the start of an internal rebellion against the company’s policies, similar to the internal protests at Google over the company’s protection of Android co-founder Andy Rubin against sexual-assault allegations, which eventually led more than 20,000 employees to stage a global walkout in November 2018.

The letter also comes on the heels of revelations that Facebook’s leaders have continued to tacitly approve of disinformation campaigns while misleading the public about their efforts against them. In testimony on October 23, Zuckerberg told Rep. Alexandria Ocasio-Cortez (D-N.Y.) that he learned of Cambridge Analytica’s Facebook data-mining efforts in March 2018, even though Facebook had already closed, back in 2015, the loophole that gave the analytics company access to user data.

Politicians cry foul at Facebook

In one exchange, Ocasio-Cortez asked Zuckerberg whether she could get away with targeting Facebook ads at Republicans that falsely characterize GOP support for the Green New Deal, an environmental-infrastructure proposal she has been promoting. He didn’t give a definitive response. Afterward, a left-leaning group ran an ad doing just that, and Facebook promptly banned it.

Facebook also says it will continue to fact-check ads from a registered Democrat who declared his candidacy in the 2022 California governor’s race solely to evade Facebook’s policy of not fact-checking politicians’ ads.

Other declarations by Facebook executives are starting to look like part of a corporate disinformation campaign. One claim is that Zuckerberg has sought input from across the political spectrum at private dinners; it is unclear whether he’s actually invited any leftists to dinner. Another is that company actions and policies favor neither liberal nor conservative ideas; it appears that they are actually punishing left-leaning news Pages while promoting right-leaning ones. Meanwhile, Facebook’s top Washington lobbyists have all been promoting GOP agendas.

The letter to Facebook’s top brass represents a change in the debate “at the core of the controversy,” says David Carroll, a media design professor at The New School in Manhattan, who has seen some success in suing Cambridge Analytica for all of the data it collected about him. For the first time, Facebook employees are publicly voicing, en masse, their discomfort with some of the ways Facebook engages in the advertising that is central to its bottom line.

Facebook’s reluctance to change

Even though political ads made up only 0.3 percent to 0.5 percent of its revenue during the 2016 and 2018 U.S. national-election cycles, a clamp-down on disinformation campaigns on Facebook could create a pathway to call the entire company’s main revenue stream into question, Carroll says.

“It’s not about the money; it’s about preserving the business model—that anyone can advertise to anyone else. Any limits to that is a limit to the fundamentals,” Carroll says.

To back up his assertion, Carroll points to the hoops Facebook forces its users to jump through to control the ads they see. Users, he says, have only after-the-fact controls over the marketers that can advertise to them. They cannot opt out of ads altogether; instead, they can block only one specific ad at a time, and only after seeing it.

What he’d like to see is “verification and transparency” in Facebook’s political ads. Currently, “you don’t know when you look at an ad that it was targeted [to] custom audiences,” he says. “The employees don’t call for that, specifically, but [Facebook] should let you know that this ad was targeted to you, a name, not a demographic or segmentation. You’re not anonymous. That should be surfaced all the way at the top” of the ad.

Where verification, transparency, and disinformation meet Facebook

That’s not the only change Facebook needs to make in order to highlight the tendrils of disinformation campaigns running through its ad network, says Dave Troy, a Baltimore, Md.-based serial entrepreneur with a history of social activism and an expert observer of social-network manipulation.

For verification and transparency to have any effect, Troy says, Facebook needs to change how its algorithm weights Pages and advertisers. He wants older stories to be flagged as such; their continued spread is now a common enough problem that news parody sites have taken up the crusade against them. And he wants the company to extend its requirement that advertisers who mention a specific political candidate first verify their identities, using a PIN mailed to them on a physical postcard, to any Page, Group, or individual that wants to run any political ad.

“The more trust, the more rights,” Troy says. He argues that disinformation campaigns have a larger goal than just getting people to believe in a spurious fact, or to vote for a specific politician or piece of legislation.

“The goal is to divide people into groups,” he says, “to make us more loyal to our little groups and prevent us from getting together to solve our problems.” That echoes comments made by Christopher Wylie, a Cambridge Analytica whistleblower, who told NPR that the company used “large amounts of highly granular data” it collected on U.S. voters to “target groups of people, particularly on the fringes of society,” who would be susceptible to manipulative messages.

“They focused a lot on disinformation,” Wylie said.

Facebook also needs to wise up to the use of “true information” in disinformation campaigns, Troy says. He points to an event publicized on Facebook over the summer, in which Trump supporters swept into Baltimore to “clean up” trash in the city after President Donald Trump verbally attacked Congressman Elijah Cummings, the longtime Democratic representative from Baltimore who recently passed away.

“Facebook should know that [those posts] were part of a coordinated activity” and alert its users to that fact, Troy says. The company should also be flagging pages like the cooking-focused Taste Life and the home-improvement-focused DIY Crafts that have hundreds of thousands or millions of followers but have “no provenance information,” “no linked website,” and “no obvious reason why it exists,” he says.

“Over time, there’s an opportunity for [group administrators] to inject content into those channels. And you can manipulate [members] in certain ways. People who are watching cooking videos are probably representational of certain demographics. You can send them content unrelated to that page,” he says. “It’s a nefarious mechanism, and you have no idea why it exists or who’s doing it.”

Without changes like these, Facebook will continue to attract, host, and spread disinformation campaigns, Carroll says.

“All burden is on the user,” he says, while advertisers and marketers—including those behind disinformation campaigns—are allowed to hide their identities and, crucially, their sources of funding. Users “don’t get any privacy in the system, but advertisers and campaigns do,” Carroll says. “It’s not the right of free speech, it’s the right of free reach.”

Update, October 30 at 10:35 a.m. PST, with details on a second disinformation campaign network take-down.