Debate over data security conflates tech and legal issues

SAN FRANCISCO—Should tech companies be allowed to shield customer data from government investigators? The hot-button issue pits sovereign states and U.S. law enforcement agencies, which seek access to company-controlled consumer data, often for criminal investigations, against captains of Silicon Valley such as Google, Facebook, and Apple.

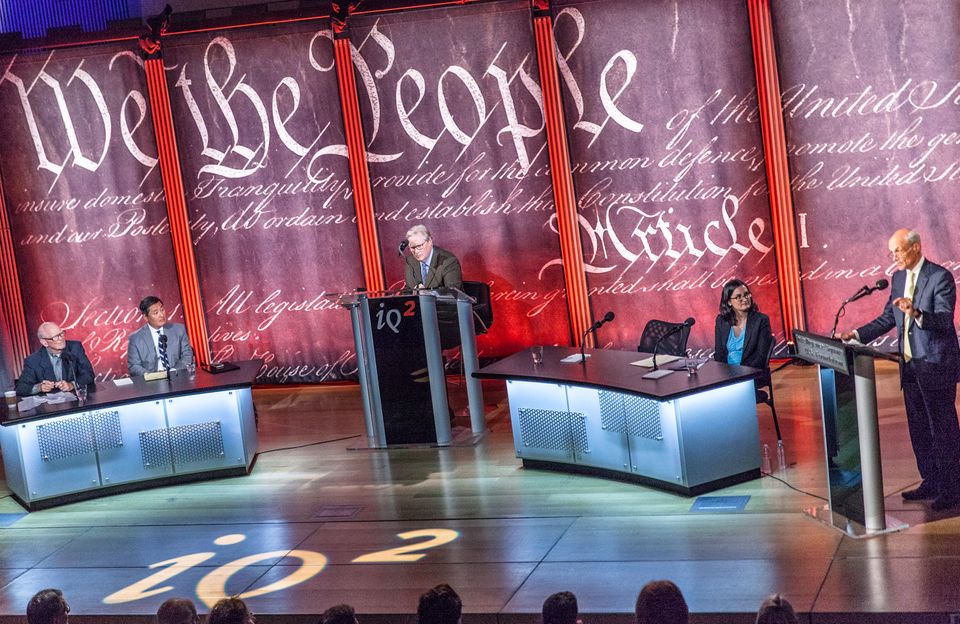

The debate was also the focus of the most recent Intelligence Squared U.S., a 10-year-old, award-winning podcast debate series, recorded in front of a live audience at the SFJazz Center on June 6. Arguing that government agencies have the right to demand all customer data from tech companies were John Yoo, a University of California at Berkeley professor who infamously drafted President George W. Bush’s legal memo authorizing government torture of wartime detainees, and Stewart Baker, former general counsel for the National Security Agency and the first assistant secretary for policy at the Department of Homeland Security.

Catherine Crump, former staff attorney at the American Civil Liberties Union, and Michael Chertoff, former secretary of homeland security and, ironically, the architect of the Patriot Act, argued that tech companies should be allowed some leeway to protect customer data from government demands.

During Intelligence Squared U.S. events, which millions of people eventually hear through podcasts, Web streams, and public radio broadcasts, the studio audience votes on the debate's question before it begins. Each side then makes opening statements, followed by questions from a moderator, questions from the audience, closing statements, and a final audience vote. In Oxford debate style, the winner is the side whose share of the vote grows more between the first and final polls.

For this debate, the audience was swayed only slightly more by the side defending companies' right to protect customer data than by the advocates of government access, with an 11 percent vote change for the former and a 10 percent vote change for the latter.

From my seat, the debate felt like a frustrating misfire, thanks to a common error when discussing the intersection of technology and law: Technical issues, such as what encryption does, were conflated with legal ones, such as how governments attempt to regulate it. And despite clear efforts, no debater seemed versed enough in the technological complexities to accurately explain why encryption matters in the first place.

As a consequence, the debaters didn't spend enough time discussing the topic through the lens of their expertise: the legal rights and responsibilities tech companies have toward their customers' data privacy, as well as toward the government jurisdictions in which they operate. Instead, they dove headlong into the technical question of whether encryption can prevent government agencies from accessing consumer data without a warrant.

Companies such as Apple have certainly found value in using encryption for which they themselves hold no key, leaving them mathematically unable to decrypt customer data for law enforcement. And last year's standoff, in which the FBI demanded that Apple unlock the San Bernardino shooter's encrypted iPhone before ultimately finding a workaround, still has value as a case study. But those technical issues weren't the ostensible focus of the debate; if they had been, there would have been a technology expert on stage.

The debate didn't completely ignore the legal issues at stake. Yoo pulled out a pocket edition of the Constitution to cite the Fourth Amendment. And Baker noted that federal and state law enforcement agencies had worked with Apple to unlock phones prior to the San Bernardino case. But the legal discussion quickly gave way to the technological, from vendor encryption to government use of exploits for security flaws, and barely touched on more important issues related to the central question, such as privacy.

The cynics among us may flippantly deride privacy as being as dead as Travis Kalanick's tenure as Uber's CEO, echoing Facebook CEO Mark Zuckerberg's 2010 comments that "privacy is no longer a social norm." But the Fourth Amendment of the Constitution, a cornerstone of U.S. legal privacy protections, has yet to be repealed. And cases the U.S. Supreme Court decided in 2011 and 2013 belie Zuckerberg's assertion.

One legal conflict the experts flicked at but didn't truly explore was whether a government body can force companies to "backdoor" their own encryption, as the U.K. is currently debating, essentially creating a mathematical hole for law enforcement to exploit. The technical debate over such a backdoor, a "golden key" championed by former FBI Director Jim Comey, centers on whether a flaw deliberately built into encryption for law enforcement is, in practice, a flaw anyone can exploit. That differs from the legal debate: Can a government agency force private businesses to write code? And if so, what would the consequences be?

The debaters also failed to review the legal history and context of government demands for consumer data, or where the relevant cases stand now. Perhaps that was simply the preference of moderator John Donvan, an award-winning journalist. But a spirited discussion about the legal rights a company may exercise when served with a warrant demanding access would have been far more interesting than listening to lawyers debate what encryption can or can't accomplish.

The debate successfully captured the attention of several hundred people in attendance, but it missed its mark. There's far more to advancing public understanding of complex security issues, particularly where government is involved, than just "use Signal" and "use Tor."